Before scanning for personal data in your systems, you need to understand the technical aspects of your system components, the types of files you need to scan, and which services you have direct control over.

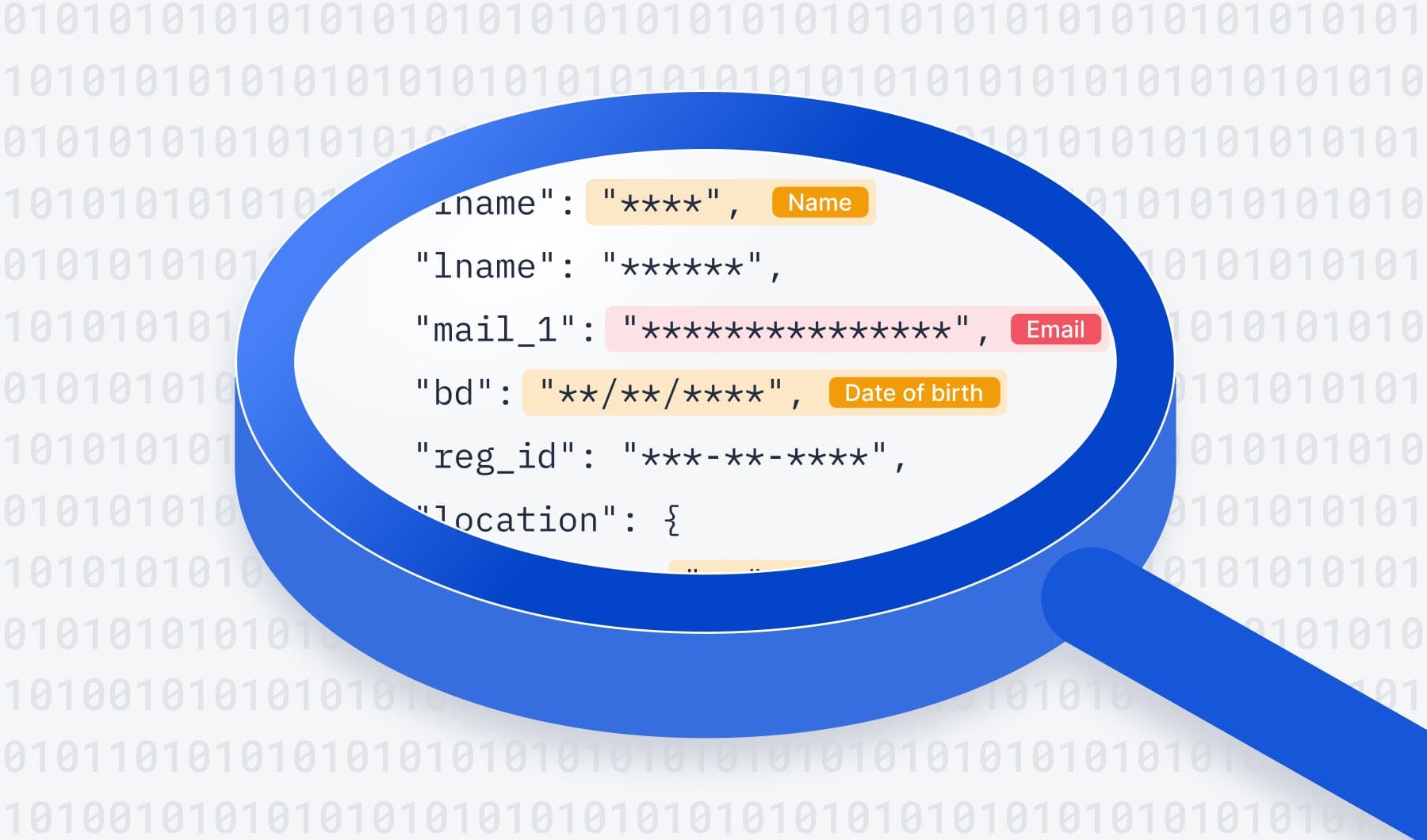

Aside from scanning and detecting data, you also need to be ready to interpret what you find and understand its importance.

Your data is spread across the different services you use. These services come in two kinds: ones you can control (controlled) and ones you can’t (uncontrolled).

Let’s take a look at them in more detail.

There are two types of services that you are able to control: stateless and stateful.

Stateless services are the most popular type. This is because you want to separate data storage from data processing. Doing so lets you scale: stateless services only need access to data to function, so you can connect them to your databases without creating additional storage. These services process the largest volume of data.

When dealing with stateless services, you need to be able to analyze incoming and outgoing data on the fly. With these services you can only scan the traffic, and that comes with limitations. For example, if you use a data loss prevention (DLP) service like the one provided by Google (stateless because it doesn’t store any data) and want to discover personal data in the cloud, you will need to create your own additional services (e.g. a proxy) to route traffic through the DLP. It’s also worth noting that these DLPs are themselves third-party services. If the DLP provides you with reports on specific users (which is most likely the case), you will have to state this usage in your privacy policy.

Stateful services are data stores such as Postgres, MySQL, and sometimes cloud storage too. For stateful services, you also need to be able to analyze the stored data as it flows in and out.

Traffic analysis works here just as it does for stateless services. However, if you want to analyze historical data, you will need to write a script or use a special function to scan the storage (on top of your traffic analysis), and how that function is written will depend on the type of storage you use. Moreover, traffic analysis alone doesn’t tell you what data is already there, only what changes have been made to it.

Some types of storage are easy to analyze, but others are more difficult. Different storage systems have different interfaces, and not all of them are designed for ease of use. For example, in MySQL you define your own data structure (schema), meaning that every time you add a new storage you will need to write a non-generic script to analyze it.

On top of controllable services, there are also uncontrollable services, also known as third-party services. Third-party services are the external SaaS tools you purchase.

Examples include Salesforce, HubSpot, Zendesk, etc. You cannot control these services. For example, if you use Zendesk and a user wants to ask a question, they fill in a Zendesk form, and you then handle the support issue from Zendesk’s interface. You cannot control how Zendesk stores or processes this data.

When sharing data with third-party services (which is what you do when you use them), you generally can’t scan for personal data because their interfaces won’t always provide that functionality. This means you need to be sure about the safeguards that they have in place to protect data.

Now that we know what we need to scan, let's take a look at how it is done.

First of all, we need to bear in mind that GET requests typically have no body, so they usually carry no personal data in the payload (although query strings and headers can still contain it). Then there are responses to GET requests, which could contain personal data, and POST requests, which could contain personal data in both the request and the response.

The code below is an example of how you can analyze personal data in your stateless services:
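As a rough illustration of in-handler scanning, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `create_user_handler` function, the `findings_log` list, and the two regular expressions, which are far too simple for real personal data detection.

```python
import json
import re

# Hypothetical, deliberately simple detectors; real detection needs much more than regexes.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+\d{7,15}\b"),
}

findings_log = []  # stand-in for wherever findings would be reported


def find_personal_data(text):
    """Return {detector name: [matches]} for every detector that matched."""
    found = {}
    for name, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[name] = matches
    return found


def create_user_handler(request_body):
    """Hypothetical POST handler that scans its own request and response."""
    # Scan the incoming request body inside the handler itself.
    findings_log.append(("request", find_personal_data(request_body)))

    user = json.loads(request_body)
    response_body = json.dumps({"id": 1, "email": user["email"]})

    # Scan the outgoing response body, again inside the handler.
    findings_log.append(("response", find_personal_data(response_body)))
    return response_body
```

Note that the scanning calls live inside the handler, so every new handler has to repeat them.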

In this example, we analyze personal data without middleware. Middleware is a pattern in HTTP servers that wraps your handlers, so it can be used to analyze traffic regardless of whether you are receiving requests or sending responses. With middleware, you can analyze requests and responses in one place, after all handlers have run.
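For comparison, a middleware variant could look like the sketch below. It assumes a Python WSGI server; `ScanMiddleware`, `echo_app`, and the `report` callback are hypothetical names, and the email regex is only a placeholder for a real detector.

```python
import io
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class ScanMiddleware:
    """WSGI middleware that scans request and response bodies for emails."""

    def __init__(self, app, report):
        self.app = app
        self.report = report  # hypothetical sink: callable(direction, matches)

    def __call__(self, environ, start_response):
        # Read the request body, then put it back so the wrapped app can read it.
        length = int(environ.get("CONTENT_LENGTH") or 0)
        body = environ["wsgi.input"].read(length)
        environ["wsgi.input"] = io.BytesIO(body)
        self._scan("request", body)

        # Buffer the whole response so it can be scanned after the handler ran.
        result = self.app(environ, start_response)
        try:
            chunks = list(result)
        finally:
            if hasattr(result, "close"):
                result.close()
        self._scan("response", b"".join(chunks))
        return chunks

    def _scan(self, direction, data):
        matches = EMAIL.findall(data.decode("utf-8", errors="replace"))
        if matches:
            self.report(direction, matches)


def echo_app(environ, start_response):
    """Hypothetical handler: echoes the request body back to the client."""
    length = int(environ.get("CONTENT_LENGTH") or 0)
    body = environ["wsgi.input"].read(length)
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"received: " + body]
```

Wrapping an application is then a one-liner such as `app = ScanMiddleware(echo_app, report)`, and the handlers themselves stay free of scanning code. Buffering the entire response keeps the sketch short, but it adds memory use and latency for large or streamed responses.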

However, this approach isn’t great: every time you create a new stateless service, you will need to write new code for data analysis, and this code could affect the service’s throughput, latency, and even CPU usage because it puts additional load on the server.

In short, it’s better to use a traffic analyzer at the network layer than to scan data at the application layer.

Analyzing traffic is the best way to understand what is going on in your systems. Traffic analysis allows you to see what data is coming in and out, instead of just seeing what is stored.

However, traffic analysis also has its issues: you need to push the traffic through an additional analyzer or monitoring tool. To keep your analysis and your systems working well, you need to select an instrument that:

One of the most important points above is the latency factor. Your business will have a maximum acceptable latency, and you will probably have some functionality that must execute as fast as possible. If things have to be executed in milliseconds, you just won’t be able to place a traffic analyzer in the path as an extra hurdle.

That’s not to say there is no way around this though. You could just build extra infrastructure to accommodate the analyzer, but that is easier said than done and will involve resources that you just may not have.

On that note, it is worth mentioning that your infrastructure is changing all the time anyway as you scale or add new functionality to your product. In this case, you will need to increase the capacity of your stateless services so that they can cope with increased traffic. Otherwise, if you are scaling up and your traffic has to pass through an analyzer, the traffic will pile up at this bottleneck and slow your system down.

Your traffic can be pretty unpredictable. Finding yourself in the news, a new marketing campaign you weren’t told about, or an explosion of users for whatever reason will lead to a spike in traffic. You need to be able to deal with this traffic; not have something throttling it.
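To see why a fixed-capacity analyzer becomes a bottleneck during a spike, consider this small simulation. It is a simplified model with made-up numbers: `analyzer_capacity` is the hypothetical number of requests the analyzer can process per second, and anything it cannot process waits in a backlog.

```python
def backlog_over_time(arrivals_per_second, analyzer_capacity):
    """Return the number of requests left waiting at the end of each second."""
    backlog = 0
    history = []
    for arrivals in arrivals_per_second:
        # New arrivals join the queue; the analyzer drains up to its capacity.
        backlog = max(0, backlog + arrivals - analyzer_capacity)
        history.append(backlog)
    return history


# Hypothetical spike: traffic triples for two seconds, then returns to normal.
print(backlog_over_time([80, 120, 300, 300, 90], analyzer_capacity=100))
# prints [0, 20, 220, 420, 410]
```

Even after the spike ends, the backlog drains by only 10 requests per second in this model, so the slowdown lasts far longer than the spike itself.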

The other thing is that the end price you charge users for your product depends on your baseline costs. If those costs go up because you have to implement a proxy, which means introducing more complex architecture, you will either have to put your prices up or tolerate a drop in profits. Plus, if you are using tools that charge variable costs depending on data volume (like DLP), costs may run out of control.
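A back-of-the-envelope estimate shows how volume-based pricing scales with traffic. The function below is only a sketch: the price per gigabyte and the traffic figures are hypothetical and are not taken from any provider’s price list.

```python
def monthly_scan_cost(requests_per_day, avg_payload_bytes, price_per_gb, days=30):
    """Estimate the monthly cost of scanning all traffic at a per-gigabyte price."""
    bytes_per_month = requests_per_day * avg_payload_bytes * days
    gigabytes = bytes_per_month / 1e9  # decimal gigabytes
    return gigabytes * price_per_gb


# Hypothetical figures: 1M requests/day, 10 kB payloads, 1.00 currency unit per GB.
baseline = monthly_scan_cost(1_000_000, 10_000, price_per_gb=1.0)
spike = monthly_scan_cost(5_000_000, 10_000, price_per_gb=1.0)
print(baseline, spike)
```

Because the cost is linear in traffic, a fivefold increase in requests means a fivefold increase in the scanning bill, which is why it helps to model this before routing all traffic through a paid scanner.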

There are two approaches to storage analysis: one for new data and one for historical data.

For new data, we can use a traffic analyzer. Historical data is data that is already in your database; to scan it, you will need to create scripts for data analysis.

Storage services, such as relational and non-relational databases, usually offer an interface through which you can carry out a full scan of the storage, analyze new files, and update files. However, each database has its own approach to scanning, analyzing, and updating, meaning you often have to create custom logic to work with each storage.
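As an illustration of such storage-specific logic, here is a minimal sketch of a full historical scan. It assumes a SQLite database accessed through Python’s built-in `sqlite3` module; the `scan_sqlite_for_emails` function and the email regex are hypothetical stand-ins for a real detector.

```python
import re
import sqlite3

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def scan_sqlite_for_emails(conn):
    """Full scan: return {(table, column): number of values containing an email}."""
    hits = {}
    tables = [row[0] for row in conn.execute(
        "SELECT name FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%'"
    )]
    for table in tables:
        # PRAGMA table_info rows are (cid, name, type, notnull, dflt_value, pk).
        columns = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
        for column in columns:
            for (value,) in conn.execute(f'SELECT "{column}" FROM "{table}"'):
                if isinstance(value, str) and EMAIL.search(value):
                    hits[(table, column)] = hits.get((table, column), 0) + 1
    return hits
```

The table and column discovery relies on `sqlite_master` and `PRAGMA table_info`, which exist only in SQLite. A scan of Postgres, MySQL, or an object store would need different discovery queries, which is exactly why these scripts tend to be non-generic.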

This type of scanning can also have a negative effect on the cost and throughput of your databases. Moreover, analysis only really makes sense when you have a specific task at hand, for example, scanning for and analyzing personal data.

Traffic analysis is the best way to scan for personal data because you can create a process for analyzing personal data without having to do it by hand. Moreover, you don’t need to get developers involved to write scripts so that you can understand where the data actually is. However, as with everything, it depends on your needs. If you need to see what data you already store (historical data) and not just hot data, you will need to employ a scanning tool.

Both techniques allow for automation, meaning you remove the human factor and don’t need to educate your engineers on what is or isn't personal data so that they can write the programs to search for it.
